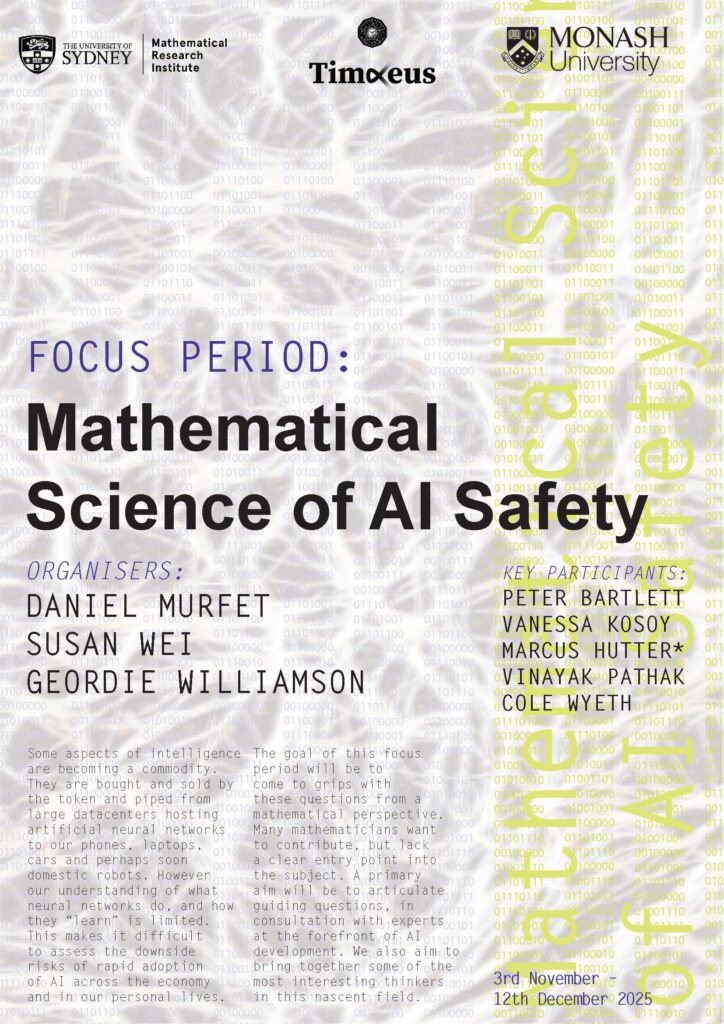

This focus period ran at the Sydney Mathematical Research Institute from November 3rd to December 12th, 2025.

Some aspects of intelligence are becoming a commodity. They are bought and sold by the token and piped from large datacenters hosting artificial neural networks to our phones, laptops, cars and perhaps soon domestic robots. However, our understanding of what neural networks do, and how they “learn”, is limited. This makes it difficult to assess the downside risks of rapid adoption of AI across the economy and in our personal lives. The goal of this focus period will be to come to grips with these questions from a mathematical perspective. Many mathematicians want to contribute, but lack a clear entry point into the subject. A primary aim will be to articulate guiding questions, in consultation with experts at the forefront of AI development. We also aim to bring together some of the most interesting thinkers in this nascent field.

Organisers: Daniel Murfet, Susan Wei & Geordie Williamson

Seminars and other sessions from Mathematical Science of AI Safety

‘Introduction to focus period’

Daniel Murfet, University of Melbourne

Tuesday 4th November, 2025

‘Solomonoff induction: Part 1’

Cole Wyeth, University of Waterloo

Thursday 6th November, 2025

Q&A with Edmund Lau

Tuesday 11th November, 2025

Edmund Lau is a researcher at the UK AI Security Institute (AISI) and has played a leading role in the call for research in learning theory within the recent Alignment Project (https://alignmentproject.aisi.gov.uk/). In this session, Dan Murfet will chair a Q&A with Edmund on the landscape of technical AI safety research as seen from his position at the AISI.

‘Vinayak Lecture Series: Part 1’

Vinayak Pathak, University of Waterloo

Thursday 13th November, 2025

‘Universal Algorithmic Intelligence’

Marcus Hutter, Australian National University/DeepMind

Part 1: Friday 14th November, 2025; Part 2: Monday 17th November

There is great interest in understanding and constructing generally intelligent systems approaching and ultimately exceeding human intelligence. Universal AI is such a mathematical theory of machine super-intelligence. More precisely, AIXI is an elegant, parameter-free theory of an optimal reinforcement learning agent, embedded in an arbitrary unknown environment, that possesses essentially all aspects of rational intelligence. The theory reduces all conceptual AI problems to pure computational questions. After a brief discussion of its philosophical, mathematical, and computational ingredients, I will give a formal definition and measure of intelligence, which is maximized by AIXI. AIXI can be viewed as the most powerful Bayes-optimal sequential decision maker, for which I will present general optimality results. This also motivates some variations such as knowledge-seeking and optimistic agents, and feature reinforcement learning. Finally, I will present some recent approximations, implementations, and applications of this modern top-down approach to AI.
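AIXI combines Solomonoff's universal Bayes mixture for prediction with expectimax planning. The sketch below illustrates only the prediction half, on a deliberately tiny and computable stand-in: the hypothesis class is "repeat this binary pattern forever" for patterns up to a hypothetical `max_period`, with a prior weight of `2**(-2*k)` for a pattern of length `k` favouring shorter patterns. Both the class and the prior are assumptions chosen for illustration, not Solomonoff's class of all programs or the construction used in the talk.

```python
from itertools import product


def mixture_predict(observed: str, max_period: int = 10) -> float:
    """Return P(next bit = '1') under a complexity-weighted Bayes mixture.

    Toy hypothesis class (an assumption, not all programs): every binary string s
    with 1 <= len(s) <= max_period, read as "repeat s forever". Prior weight
    2**(-2*len(s)), so shorter patterns are favoured. Each hypothesis is
    deterministic, so conditioning on data simply discards inconsistent ones.
    """
    t = len(observed)
    weight_one = 0.0
    weight_total = 0.0
    for k in range(1, max_period + 1):
        for bits in product("01", repeat=k):
            s = "".join(bits)
            # Keep only hypotheses that reproduce every observed bit.
            if all(observed[i] == s[i % k] for i in range(t)):
                w = 2.0 ** (-2 * k)
                weight_total += w
                if s[t % k] == "1":  # what this hypothesis predicts next
                    weight_one += w
    if weight_total == 0.0:
        raise ValueError("no hypothesis in the class is consistent with the data")
    return weight_one / weight_total


print(mixture_predict("010101"))  # small: short periodic patterns dominate and predict '0'
print(mixture_predict(""))        # 0.5 by symmetry before any data
```

Because posterior mass concentrates on the shortest consistent patterns, the mixture predicts confidently after only a few bits; long patterns still retain a little weight, which is what keeps the prediction from being exactly 0 or 1.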

Q&A with Marcus Hutter and Cole Wyeth

Monday 17th November

Chaired by Daniel Murfet.

‘Ambiline program induction’

Vanessa Kosoy

Tuesday 18th November

A key gap between machine learning theory and practice is that the theory lacks computationally efficient algorithms that learn sophisticated models. I will demonstrate a new approach to constructing such algorithms, drawing on stringology, combinatorics on words and automata theory. Specifically, we will look into the setting of deterministic sequence prediction. In this setting, I discovered “vaguely Kolmogorov-like” complexity measures on words, which admit efficient sequence prediction algorithms with mistake bounds that scale with the complexity measure.
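For readers new to mistake bounds in deterministic sequence prediction, the classical baseline is the halving algorithm: predict by majority vote of the hypotheses still consistent with the data. If the sequence is generated by some hypothesis in a finite class `H`, each mistake at least halves the consistent set, so the number of mistakes is at most `log2(|H|)`. The sketch below is that textbook algorithm on a hypothetical class of periodic patterns; it is not the efficient algorithm from the talk, and it is not efficient itself, since it enumerates the whole class.

```python
import math
from itertools import product


def periodic_class(max_period):
    """All binary patterns of length 1..max_period; each means 'repeat this forever'."""
    return ["".join(bits) for k in range(1, max_period + 1)
            for bits in product("01", repeat=k)]


def halving_mistakes(sequence, hypotheses):
    """Predict each bit by majority vote of still-consistent hypotheses; count mistakes.

    In the realizable case the true hypothesis is never discarded, and every
    mistake removes at least half of the consistent set, so
    mistakes <= floor(log2(len(hypotheses))).
    """
    alive = list(hypotheses)
    mistakes = 0
    for t, bit in enumerate(sequence):
        ones = sum(1 for h in alive if h[t % len(h)] == "1")
        guess = "1" if 2 * ones > len(alive) else "0"
        if guess != bit:
            mistakes += 1
        # Discard every hypothesis that predicted the wrong bit.
        alive = [h for h in alive if h[t % len(h)] == bit]
    return mistakes


H = periodic_class(6)            # 126 hypotheses
target = "011" * 10              # generated by the pattern "011", which is in H
m = halving_mistakes(target, H)
print(m, "<=", math.floor(math.log2(len(H))))
```

The bound scales with the logarithm of the class size; the abstract's point is to obtain bounds that scale with a complexity measure while keeping prediction computationally efficient.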

‘Programs as Singularities’

Will Troiani

Tuesday 18th November

Wiener wrote that “for a system to show any effective causality, it must be possible to consider how this system would have behaved if it had been built up in a slightly different way.” This motivates recent work developing a geometric perspective on computation: embedding Turing machines into linear logic, whose differential structure lets us study a machine by examining infinitesimal perturbations of its code. I will explain how this construction turns algorithmic structure into geometric structure, and why this matters for AI safety. The key hypothesis, central to structural Bayesianism, is that the geometry of the KL divergence encodes internal computational structure, so that Bayesian posteriors can in principle “see” and favour particular algorithmic structures. This suggests a mathematical path toward understanding and constraining the internal structure of complex models such as neural networks.

‘Lecture 3 on reflective oracles’

Cole Wyeth

Thursday 20th November

‘Singularities and MDL’

Edmund Lau

Thursday 20th November

Edmund will present some recent theoretical work from his PhD thesis, which has appeared in this preprint https://arxiv.org/abs/2510.12077, on extending the MDL principle to “singular” model classes (e.g. where the Fisher information metric is degenerate). In particular there is a two-part code where the learning coefficient, a geometric invariant, controls the code length of the parameter.

‘Mechanistic Interpretability’

Chris Olah

Tuesday 25th November

Chris is one of the founders of the field of mechanistic interpretability and a co-founder of Anthropic, where he leads the interpretability team (https://colah.github.io/about.html). He’ll speak on work he and his team are doing to understand how neural networks do what they do, and how they are applying this to make progress on problems in AI safety.

‘Nonrealizable learning theory via imprecise probabilities’

Vanessa Kosoy

Thursday 27th November

Except for toy problems, almost every real-world phenomenon is nonrealizable (i.e. lies outside the effective hypothesis class of your learning algorithm) or misspecified (i.e. not absolutely continuous w.r.t. your Bayesian prior). This is an inherent consequence of the computational complexity of the world. Traditionally, learning theory uses one of two approaches to deal with this complication: agnostic learning (convergence to minimal-error-in-class model), and adversarial regret bounds (convergence to best-in-class-in-hindsight policy). However, both approaches have substantial shortcomings. Imprecise probability is a decision-theoretic framework for modeling uncertainty that goes beyond classical probability theory. We will show how introducing imprecise probability into learning theory produces an alternative/complementary approach to nonrealizability with important benefits.

The talk will be based mostly on the papers “Imprecise Multi-Armed Bandits” and “Robust Online Decision Making” by Alex Appel and myself.
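To give a concrete feel for decision-making under imprecise probability, the sketch below uses the textbook Γ-maximin rule: the agent's beliefs are a finite credal set of distributions, and it picks the action with the largest worst-case (lower) expected reward. The action names, rewards and distributions are hypothetical, and this is only the simplest imprecise-probability decision rule, not the algorithms from the papers above. Since expected reward is linear in the distribution, the minimum over a finite set equals the minimum over its convex hull.

```python
def lower_expectation(rewards, credal_set):
    """Worst-case expected reward over a finite set of outcome distributions."""
    return min(sum(p * r for p, r in zip(dist, rewards)) for dist in credal_set)


def gamma_maximin(actions, credal_set):
    """Pick the action whose lower expectation is largest (Gamma-maximin rule)."""
    return max(actions, key=lambda a: lower_expectation(actions[a], credal_set))


# Hypothetical two-outcome example: rewards per outcome for each action.
actions = {"safe": [0.5, 0.5], "risky": [1.0, 0.0]}
credal = [[0.3, 0.7], [0.8, 0.2]]  # two candidate distributions over outcomes

print(gamma_maximin(actions, credal))        # "safe": worst case of "risky" is 0.3
print(gamma_maximin(actions, [[0.8, 0.2]]))  # "risky" under a single precise prior
```

The contrast between the two calls shows the basic behaviour: committing to one prior can favour an action that performs badly under other distributions the agent has not ruled out.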

‘Robust linear multi-armed bandits’

Vanessa Kosoy

Tuesday 2nd December

I will talk about the theory of robust linear multi-armed bandits, which is a generalization of stochastic linear multi-armed bandits that incorporates imprecise probability theory. If there is time, I might also talk about the theory of decision-estimation coefficients (developed by Foster, Qian, Rakhlin, Han and others) and its extension to robust decision-making (by Appel and myself).

‘In-Context Linear Regression’

Tuesday 2nd December

‘Newcomb’s paradox and reinforcement learning’

Vanessa Kosoy

Thursday 4th December

The problem of counterfactuals, and the related problem of free will, are philosophical puzzles that stand in the way of fully understanding rational decision-making. These are especially apparent in thought experiments like Newcomb’s paradox, in which the agent is forced to regard their own actions as predictable. At the same time, predictability of actions is a central ingredient of rationality in multi-agent systems and self-modifying agents. In this talk, I will introduce supradistributions (a fuzzy version of credal sets) and will show how viewing the problem through the lens of reinforcement learning with supra-POMDPs dissolves the confusion (or at least reduces it).

‘Informal talk on susceptibilities and ablations’

Andy Gordon

Thursday 4th December

‘Susceptibility UMAP show and tell’

Andy Gordon

Thursday 4th December

‘Singular learning theory for reinforcement learning’

Chris Elliott

Friday 5th December

Chris will speak on recent work done in collaboration with a team at Timaeus (Einar Urdshals, David Quarel, Matthew Farrugia-Roberts, Edmund Lau and DM) applying Watanabe’s ideas in the context of RL. He’ll talk about some of the conceptual challenges involved as well as present experiments validating the use of the learning coefficient in this setting.

‘Making sense of alignment agendas’

Monday 8th December

‘Formal computational realism: a mathematical framework for agent theory with metaphysical realism’

Vanessa Kosoy

Tuesday 9th December

Classical theories of agents such as AIXI and reinforcement learning ascribe to the agent beliefs and goals grounded in the “cybernetic” ontology of actions and percepts. This is reminiscent of logical positivism in analytic philosophy. However, both the epistemology of science and the theory of AI alignment require metaphysical realism, i.e. grounding in objective reality. Formal Computational Realism (FCR) is a novel mathematical framework for agent theory which takes a metaphysical realist perspective. Specifically, FCR holds that objective reality consists of _information about computable logical facts_, a position broadly similar to Ontic Structural Realism in analytic philosophy (but with rigorous mathematical formalization). In this talk, I will explain the motivation and some mathematical elements of FCR.

‘Sampling from degenerate loss landscapes’

Ronan Hitchcock

Tuesday 9th December

There is empirical evidence that stochastic gradient MCMC samplers (such as SGLD) can measure local geometric invariants of the loss function for very large neural networks. From a theoretical perspective, this is poorly understood and is, in several respects, surprising. In this talk, I will focus on the problem of characterising the local neighbourhood that a sampling algorithm explores, with particular attention to asymptotic expansions of exit times. I propose a general approach via potential theory, outlining some important subproblems, and then derive asymptotic expansions of exit times for two special classes of loss function. I conclude with a discussion of how these results relate to the question “Is training Bayesian learning?”, and attempt to restate this question more precisely.
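As a point of reference for the sampling question, the sketch below implements the local learning coefficient estimator used in the SLT literature, λ̂ = nβ(E[L(w)] − L(w*)) with β = 1/log n, where the expectation is over a posterior localized around w* by a quadratic term of strength γ. Several simplifications are assumptions of this sketch: full-batch unadjusted Langevin dynamics replaces SGLD, a closed-form toy loss stands in for the empirical loss of a network, and the values of `n`, `eps`, `gamma` and the chain lengths are illustrative. For a regular quadratic minimum in d dimensions the theoretical value is d/2.

```python
import math
import random


def langevin_llc(loss, grad, w_star, n, steps=20000, burn_in=2000,
                 eps=1e-5, gamma=1.0, chains=4, seed=0):
    """Estimate a local learning coefficient by localized Langevin sampling.

    Samples approximately from p(w) ∝ exp(-n*beta*loss(w) - gamma/2*|w - w_star|^2),
    beta = 1/log(n), via the update w <- w - eps/2 * grad U(w) + sqrt(eps) * noise,
    then returns n*beta*(mean loss over samples - loss(w_star)).
    Full-batch Langevin is used here in place of SGLD.
    """
    rng = random.Random(seed)
    beta = 1.0 / math.log(n)
    total, count = 0.0, 0
    for _ in range(chains):
        w = list(w_star)  # each chain starts at the local minimum
        for t in range(steps):
            g = grad(w)
            w = [wi - 0.5 * eps * (n * beta * gi + gamma * (wi - si))
                 + math.sqrt(eps) * rng.gauss(0.0, 1.0)
                 for wi, gi, si in zip(w, g, w_star)]
            if t >= burn_in:  # discard the transient before averaging
                total += loss(w)
                count += 1
    return n * beta * (total / count - loss(w_star))


def quad_loss(w):
    """Toy regular loss with a nondegenerate minimum at the origin."""
    return sum(x * x for x in w)


def quad_grad(w):
    return [2.0 * x for x in w]


lam = langevin_llc(quad_loss, quad_grad, [0.0, 0.0], n=10_000)
print(round(lam, 2))  # should come out close to d/2 = 1.0
```

Swapping in a degenerate loss such as `w[0]**4` is a quick way to see how the estimate responds to degeneracy, though near flat directions the chain mixes more slowly and the step size and run length may need adjusting; those are exactly the exit-time and neighbourhood questions the talk addresses.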

‘SLT basics and the real log canonical threshold’

Simon Pepin Lehalleur

Tuesday 9th December

Simon will give an introductory talk detailing the connections between algebraic geometry, specifically the real log canonical threshold, and statistical learning theory.

‘A Bayesian Predictive View of Transformers’

Susan Wei

Tuesday 9th December

(This is the same talk I will give at the Sydney Data Science workshop on Friday morning.) Transformers meta-trained on “Bayes-filtered” data serve simultaneously as a controllable “sandbox” for understanding the phenomenon of in-context learning and as a flexible engineering tool for modern Bayesian inference. This talk bridges these two perspectives using Bayesian Predictive Inference (BPI). I will demonstrate how we can interrogate a transformer’s latent beliefs by autoregressively “rolling out” its predictions. I will also demonstrate how to recover epistemic uncertainty from these meta-trained models, even when the posterior distribution is not explicitly represented. Finally, I will discuss TabMGP, a method for constructing valid martingale posteriors using these foundation models as the predictive engine.
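The idea of recovering uncertainty by “rolling out” predictions can be illustrated without a transformer. In the predictive-resampling view of martingale posteriors, one repeatedly imputes future observations from a one-step predictive and records a statistic of the completed sequence. The sketch below assumes the simplest possible predictive, a Pólya urn that draws uniformly from everything seen or imputed so far, in place of a meta-trained model; in the limit of infinitely long rollouts this particular scheme is known to recover the Bayesian bootstrap. It is a toy analogue only, not the TabMGP method, and the data and rollout sizes are hypothetical.

```python
import random
import statistics


def polya_martingale_posterior(data, rollout_len=500, num_rollouts=400, seed=0):
    """Draw approximate posterior samples of the mean by predictive resampling.

    One-step predictive (an assumption standing in for a learned model):
    the next value is a uniform draw from the observed plus imputed values.
    Each completed rollout yields one posterior sample of the mean.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(num_rollouts):
        seq = list(data)
        for _ in range(rollout_len):
            seq.append(rng.choice(seq))  # autoregressively "roll out" the predictive
        samples.append(statistics.fmean(seq))
    return samples


data = list(range(1, 11))
post = polya_martingale_posterior(data)
print(statistics.fmean(post), statistics.stdev(post))  # centred near 5.5, with spread
```

The spread of the samples is the epistemic uncertainty: it comes entirely from randomness in the imputed future, with no explicit posterior over parameters.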

Dean’s Lecture Series with Prof Shafi Goldwasser

Shafi Goldwasser

Wednesday 10th December

For decades, cryptographic tools and models have, at their essence, transformed technology platforms controlled by worst-case adversaries into trustworthy platforms. This talk will describe how interactive proofs and cryptographic modeling can be used to build trust in legal domains and in various phases of the machine learning pipeline, including verification of LLM outputs.

Special SMRI Seminar, ‘Gradient optimization methods: the benefits of instability’

Peter Bartlett, UC Berkeley

Wednesday 10th December

Deep learning, the technology underlying the recent progress in AI, has revealed some major surprises from the perspective of theory. These methods seem to achieve their outstanding performance through different mechanisms from those of classical learning theory, mathematical statistics, and optimization theory. Optimization in deep learning relies on simple gradient descent algorithms that are traditionally viewed as a time discretization of gradient flow. However, in practice, large step sizes – large enough to cause oscillation of the loss – exhibit performance advantages. This talk will review recent results on gradient descent with logistic loss with a step size large enough that the optimization trajectory is at the “edge of stability.” We show the benefits of this initial oscillatory phase for linear functions and for multi-layer networks.

Based on joint work with Pierre Marion, Matus Telgarsky, Jingfeng Wu, and Bin Yu.
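The term “edge of stability” comes from a classical step-size threshold: gradient descent on a quadratic with curvature h is stable only when the step size η is below 2/h, and at the edge of stability the sharpness (top Hessian eigenvalue) hovers around 2/η. The sketch below shows only that classical threshold on a one-dimensional quadratic, with hypothetical values of h and η; it does not reproduce the logistic-loss results of the talk, where the loss is not quadratic and large, oscillation-inducing steps can still lead to convergence.

```python
def gd_on_quadratic(h, eta, w0=1.0, steps=50):
    """Run gradient descent on f(w) = h/2 * w**2 and return all iterates.

    Each step multiplies w by (1 - eta*h), so iterates shrink iff |1 - eta*h| < 1,
    i.e. iff 0 < eta < 2/h. For eta just below 2/h they flip sign every step.
    """
    w = w0
    trajectory = [w]
    for _ in range(steps):
        w = w - eta * h * w  # the gradient of h/2 * w**2 is h*w
        trajectory.append(w)
    return trajectory


h = 10.0  # curvature, so the stability threshold is 2/h = 0.2
for eta in (0.05, 0.19, 0.21):
    traj = gd_on_quadratic(h, eta)
    print(eta, traj[1], traj[-1])  # smooth decay, oscillating decay, divergence
```

On a quadratic, crossing 2/h always means divergence; part of what makes the talk's results surprising is that on non-quadratic losses such as the logistic loss, an initial oscillatory phase need not be fatal and can even help.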

‘AI policy and governance Q&A’

Beba Cibralic

Thursday 11th December

Beba, originally from Australia, is a researcher at RAND in the USA working on AI governance, ethics, and safety; she is speaking to us in her private capacity. Dan will have questions prepared, but bring your own questions about the broader context of AI governance, regulation and geopolitics.

‘Formal computational realism: Part 2’

Vanessa Kosoy

Thursday 11th December

Classical theories of agents such as AIXI and reinforcement learning ascribe to the agent beliefs and goals grounded in the “cybernetic” ontology of actions and percepts. This is reminiscent of logical positivism in analytic philosophy. However, both the epistemology of science and the theory of AI alignment require metaphysical realism, i.e. grounding in objective reality. Formal Computational Realism (FCR) is a novel mathematical framework for agent theory which takes a metaphysical realist perspective. Specifically, FCR holds that objective reality consists of _information about computable logical facts_, a position broadly similar to Ontic Structural Realism in analytic philosophy (but with rigorous mathematical formalization). In this talk, I will explain the motivation and some mathematical elements of FCR.

‘Math of Psychometrics & Evaluations’

Liam Carroll

Thursday 11th December

‘AI alignment requires mathematical and philosophical rigour: the challenge and the path forward’

Vanessa Kosoy

Friday 12th December

The possibility of unaligned artificial superintelligence is the greatest danger facing human civilization in our era, perhaps even in all of history. In the long term, the only way to avoid this danger is (arguably) creating a human-aligned artificial superintelligence. However, the latter would require us to overcome a series of difficult technical challenges, for which our current state of knowledge is insufficient. Overcoming these challenges requires conceptual breakthroughs in our understanding of intelligent agency, conforming to strict standards of mathematical and philosophical rigour. In this talk, I will both outline the challenges and propose a path towards addressing them. We will also discuss how the research directions I discussed in my previous lectures fit into this agenda.

‘Singularities, structure and learning beyond the RLCT’

Simon Pepin Lehalleur

Friday 12th December

SLT presents a clear picture of learning for Bayesian parametric models in the large data limit, showing that the main “sufficient statistic” of the data-generating process controlling the asymptotics of posterior concentration and generalisation error is the RLCT of the KL divergence K(w). In this talk, I will largely sidestep the important question of what this can tell us about deep learning, and instead explore three further aspects of this link between learning and singularities.

First, I will discuss how Watanabe in fact proves a more precise (but complicated) asymptotic expansion of the Bayesian posterior, with lower-order terms incorporating finer geometric invariants of the singularities of K(w). This expansion can be described either in terms of posterior expectations of individual observables or in terms of Schwartz distributions. Understanding this expansion seems important for theoretically grounding generalisations of LLC estimation, such as susceptibilities.

Second, SLT in itself does not tell us how the computational structure of the data-generating process is reflected in the geometry of K(w). I will discuss a few results and some open questions around this central problem.

Finally, I will explain a more “dynamical” interpretation of the RLCT in terms of the geometry of jet schemes of K(w).
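For reference, the leading-order statements the abstract builds on are Watanabe's asymptotics in the realizable case. Here $L_n(w) = -\frac{1}{n}\sum_{i=1}^n \log p(X_i \mid w)$ is the empirical negative log-likelihood, $\varphi$ the prior, $w_0$ a true parameter, $\lambda$ the RLCT of $K(w)$ with multiplicity $m$, $F_n$ the free energy (Bayes stochastic complexity) and $G_n$ the Bayes generalisation error. The more precise expansion discussed in the talk refines these; the block below states only the standard leading terms.

```latex
% Watanabe's leading-order asymptotics (realizable case)
F_n = -\log \int e^{-n L_n(w)}\,\varphi(w)\,dw
    = n L_n(w_0) + \lambda \log n - (m-1)\log\log n + O_p(1),
\qquad
\mathbb{E}[G_n] = \frac{\lambda}{n} + o\!\left(\frac{1}{n}\right).
% For a regular model with d parameters, \lambda = d/2 and m = 1,
% recovering the familiar BIC penalty (d/2)\log n.
```

The regular case shows why the RLCT plays the role of an “effective dimension”: singular models replace the parameter count $d/2$ with $\lambda$, which can be much smaller.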