See the calendar below for future seminars and events.

After each Thursday seminar, attendees are welcome to join the SMRI Afternoon Tea, held on Thursday afternoons at 2 pm on the Quadrangle Terrace. The terrace is accessed through the entry in Quadrangle Lobby P and via the SMRI Common Room on level 4.

Upcoming and current events: seminars, workshops and courses

The Friday Endoseminar, ‘Computing modular forms’

Dates and times: Friday 20th February and Friday 27th February, 2 pm – 4 pm

Location: SMRI Seminar Room (A12 Macleay Room 301)

Speaker: John Voight

Abstract: In these lectures, we will explain what it means to compute modular forms. In the first half of the first lecture, we will study modular curves arising from the quotient of the upper half-plane by congruence subgroups of SL_2(ℤ). We abstract the notion of a path with end points on the boundary to modular symbols, and we recover modular forms through the action of Hecke operators (interpreted as “averaging”). In the second half of the first lecture, we reinterpret modular forms as (co)homology classes, providing a general definition (beyond GL_2). In the second lecture, we discuss algorithmic applications of the general definition: first to algebraic modular forms where the upper half-plane has been replaced by a finite set of points, and second to a generalized notion of modular symbols.

Notes are available here.

Seminar on Canonical Bases in Representation Theory

Dates and times: Wednesdays from 10 am – 12 pm for the seminar, followed by a weekly exercise session from 1 pm – 2 pm, starting Wednesday 4th March 2026

Location: SMRI Seminar Room (A12 Macleay Room 301)

Details: In this seminar, we aim to answer the following motivating questions about irreducible representations of semisimple Lie algebras and related structures:

- How can we compute their characters?

- How can we compute tensor product decompositions?

- What are “canonical bases” for these modules?

A powerful tool introduced in the early 1990s, known as the canonical basis (Lusztig) or crystal basis (Kashiwara), provides a model to answer these questions.

We will start by learning about prototypical constructions that serve as motivation, before proceeding to the construction and properties of these modern bases: first studying Lusztig’s approach, and then Kashiwara’s approach.

This seminar will focus on the main ingredients and recipes used to motivate, construct and describe these bases: Kazhdan-Lusztig bases, Gelfand-Tsetlin bases, Lusztig’s algebraic construction, Lusztig’s geometric/topological construction, and Kashiwara’s crystal/global bases. More information is available on the Canonical Bases in Representation Theory website.

SMRI Seminar

Date and time: Thursday 26th February, 1 pm – 2 pm

Location: SMRI Seminar Room (A12 Macleay Room 301)

Speaker: Jakob Zech, Heidelberg University

International Day of Mathematics Events:

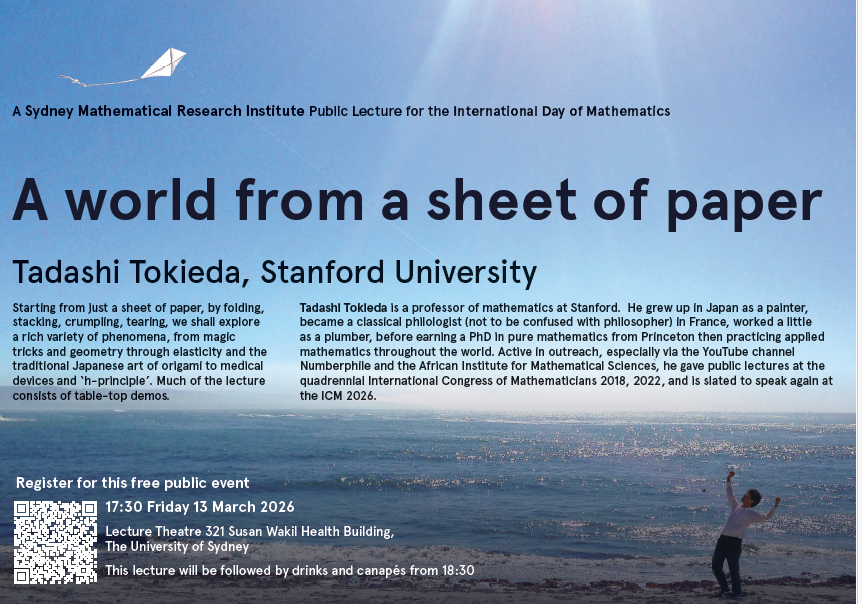

Public Lecture: ‘A world from a sheet of paper’ by Tadashi Tokieda

Speaker: Tadashi Tokieda, Stanford University

Date and time: Friday 13th March 2026, 5:30 pm – 6:30 pm, followed by light refreshments

Location: Lecture Theatre 321, SWHB, The University of Sydney

Abstract: Starting from just a sheet of paper, by folding, stacking, crumpling, tearing, we shall explore a rich variety of phenomena, from magic tricks and geometry through elasticity and the traditional Japanese art of origami to medical devices and ‘h-principle’. Much of the lecture consists of table-top demos.

This public lecture is hosted by the Sydney Mathematical Research Institute as part of our program for International Day of Mathematics / Pi Day. The talk will be tailored to a general audience and is suitable for individuals from Year 10 onward. This is a free event, but registration is essential.

Maths at the Museum

To celebrate International Day of Mathematics, the Sydney Mathematical Research Institute (SMRI) will take over the Chau Chak Wing Museum (CCWM) with fun family activities across the weekend. The official theme of the International Day of Mathematics in 2026 is “Mathematics and Hope”. We will explore mathematics through an integrated program of talks, panels, performances and children’s activities in our Maths Craft Room. More details to come; keep an eye on the SMRI event page: Maths at the Museum.

Date and time: Saturday 14th and Sunday 15th March, 12 pm – 4 pm

Location: Chau Chak Wing Museum, The University of Sydney